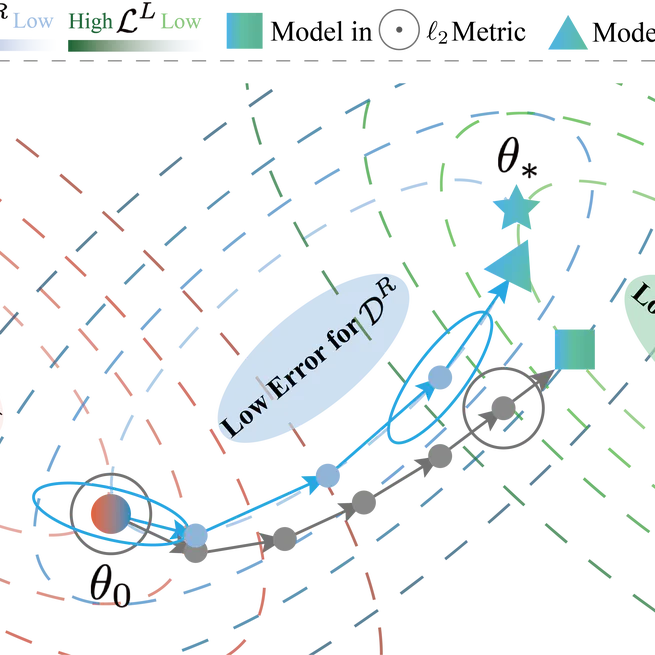

This paper proposes a unified gradient-based framework for task-agnostic continual learning-unlearning. It models continual learning and machine unlearning within a single KL-divergence-based optimization objective and decomposes the gradient updates into interpretable components. It also introduces a Hessian-informed remain-preserved manifold and the UG-CLU algorithm to balance knowledge acquisition, targeted unlearning, and stability across benchmarks.

May 21, 2025

This paper shows theoretically that inter-class imbalance is entirely attributable to imbalanced class priors, and that the function learned from the intra-class intrinsic distributions is the Bayes-optimal classifier. It then shows that a simple adjustment of the model logits during training can effectively counteract class-prior bias and approach the corresponding Bayes optimum.

May 29, 2024
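
To make the logit-adjustment idea in the summary above concrete, here is a minimal sketch of one common form of it: adding the log class prior to the logits before the softmax cross-entropy during training. This is an assumption about what such an adjustment might look like, not the paper's exact formulation. The function name `logit_adjusted_cross_entropy`, the `class_counts` argument, and the temperature `tau` are hypothetical names introduced for illustration.

```python
import numpy as np


def log_softmax(z):
    # Numerically stable log-softmax over the class axis.
    z = z - z.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def logit_adjusted_cross_entropy(logits, labels, class_counts, tau=1.0):
    """Cross-entropy with log-prior-adjusted logits (illustrative sketch).

    logits:       (n_samples, n_classes) raw model outputs
    labels:       (n_samples,) integer class indices
    class_counts: (n_classes,) training-set frequency of each class
    tau:          hypothetical scaling factor for the prior term
    """
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels)
    counts = np.asarray(class_counts, dtype=float)

    # Empirical class priors estimated from the training label counts.
    priors = counts / counts.sum()

    # Shift each class logit by tau * log(prior). Frequent classes get a
    # larger offset, so the raw logits must work harder for rare classes.
    adjusted = logits + tau * np.log(priors)

    log_probs = log_softmax(adjusted)
    return -log_probs[np.arange(len(labels)), labels].mean()
```

With balanced class counts the prior term is the same constant for every class, so it cancels in the softmax and the loss reduces to ordinary cross-entropy. Under this sketch, the adjustment is applied only during training; at inference time the raw, unadjusted logits would be used for prediction.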