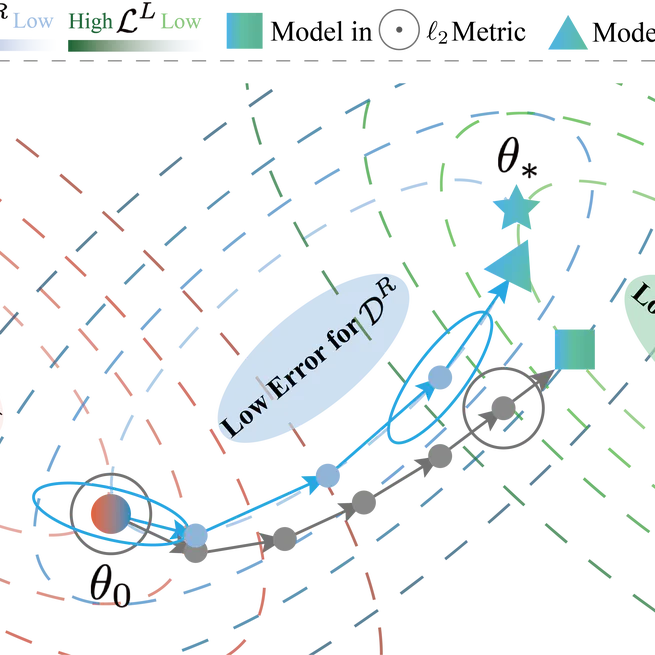

This paper proposes a unified gradient-based framework for task-agnostic continual learning-unlearning. It models continual learning and machine unlearning within a single KL-divergence-based optimization objective, decomposes the gradient updates into interpretable components, and introduces a Hessian-informed remain-preserved manifold together with the UG-CLU algorithm to balance knowledge acquisition, targeted unlearning, and stability across benchmarks.

May 21, 2025

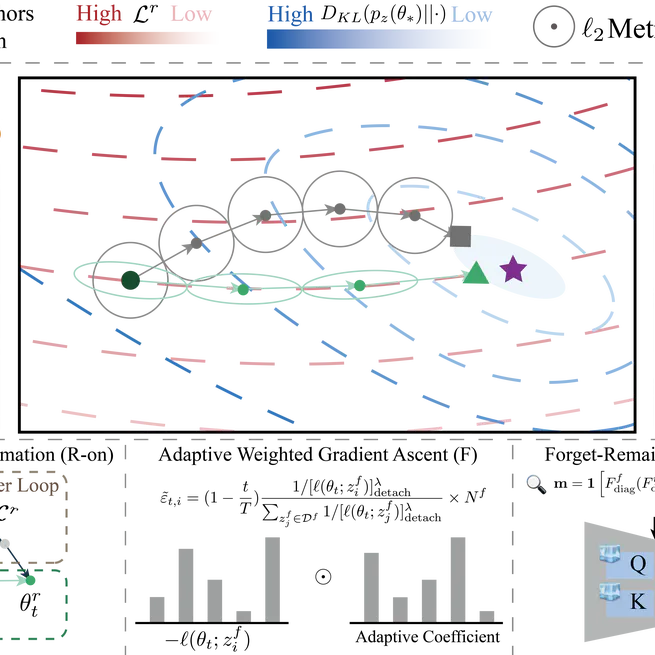

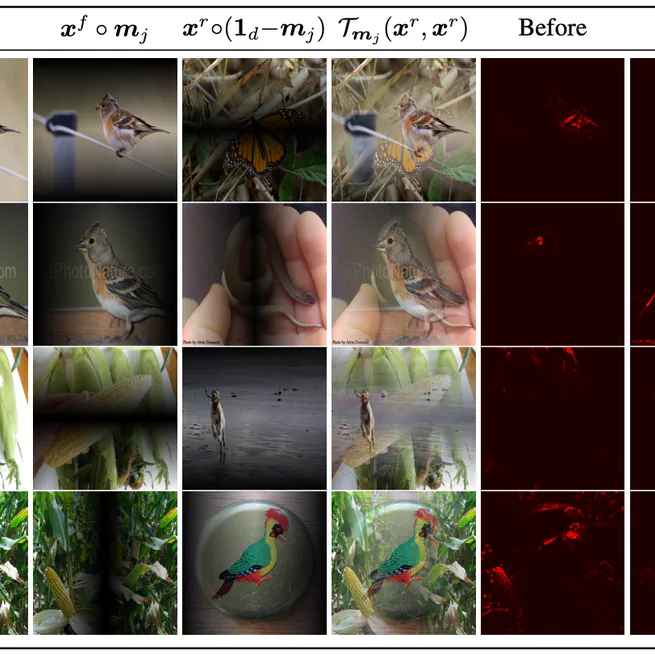

This work proposes a fast-slow parameter update strategy that implicitly approximates the up-to-date salient unlearning direction. The strategy is free from specific modal constraints and adapts across computer vision unlearning tasks, including classification and generation.

Oct 7, 2024

This method substantially reduces over-forgetting and is strongly robust to hyperparameter choices, making it a promising candidate for practical machine unlearning.

May 24, 2024

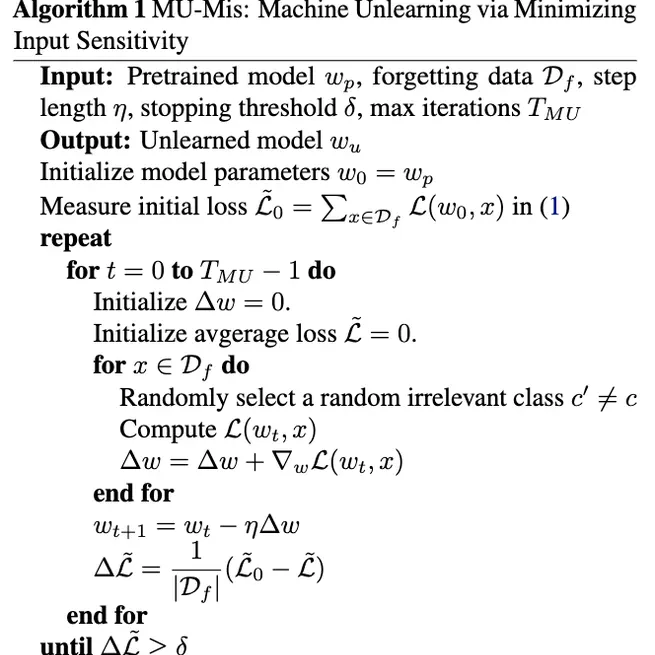

This work introduces MU-Mis (Machine Unlearning by Minimizing input sensitivity), a method that suppresses the contribution of the forgetting data. It is the first remaining-data-free method shown to outperform state-of-the-art unlearning methods that use the remaining data.

Feb 23, 2024